Personalized Mobile Learning: An Adaptive Testing Approach using Machine Learning Algorithms

Abstract

The popularity of mobile devices has transformed learning, and mobile learning has become an important part of modern education. However, most existing mobile learning systems cannot meet the individual needs of each learner, which limits learning effectiveness. This paper presents an adaptive testing approach for mobile learning that uses machine learning algorithms to deliver personalized tests and thereby improve learners’ performance. The proposed adaptive algorithm takes into account each learner’s preferences and proficiency level. An experiment on a real-world mobile language learning system demonstrates the effectiveness of the approach.

1. Introduction

Mobile learning has become an increasingly popular mode of education, offering flexibility and convenience to learners all over the world. However, most mobile learning systems cannot provide learning paths tailored to each learner’s knowledge level. Adaptive testing addresses this by adjusting the difficulty and content of a test according to the student’s responses. The aim of this paper is to determine how adaptive testing can be realized in mobile learning using machine learning algorithms, so as to improve both the testing process and the learner’s experience.

Integrating adaptive testing into mobile learning can improve each individual’s learning experience. By continuously measuring students’ knowledge levels, adaptive testing applications may identify users’ strengths and weaknesses more precisely than conventional or other online assessment methods. Based on this information, personalized content can be supplied, since the test items selected by an adaptive system differ for each student depending on their performance or course expectations. Adaptive tests may also reduce user frustration: learners know that, by design, they are not expected to answer every question correctly, and they can still perform well.

Adaptive testing opens many possibilities for mobile learning. This paper therefore looks at the current landscape, research, and applications of adaptive testing in mobile learning, and at how it can change the way we learn through its impact on learning outcomes.

2. Literature Review

Many studies on adaptive testing in mobile learning have been conducted, aiming to show that it enhances learning outcomes and engages learners. For example, one adaptive mobile learning system applies item response theory to adjust the difficulty of assessments based on the learner's performance. Another mobile learning platform uses machine learning algorithms to provide individual feedback and recommendations. Deep learning algorithms have been proposed for adaptive testing in mobile learning, and natural language processing has also been investigated for this purpose. Adaptive testing has been found to reduce anxiety and increase self-efficacy in mobile learning. It has also been used to offer learners adaptive learning paths and has been examined for accessibility and inclusivity for learners with disabilities. A framework for adaptive testing in mobile learning has been put forward that comprises learner modeling, item banking, and feedback mechanisms. The combination of gamification and adaptive testing in mobile learning has also been explored and was found to increase learner engagement and motivation.

3. Methodology

We are developing a mobile learning platform with an adaptive assessment module. This module runs a machine learning algorithm that analyzes learner performance data, including responses to previous questions, time spent on each question, and learning style. It then adjusts the difficulty and content of the next question to best match the learner's knowledge and learning style.

3.1. Algorithm

We present a new algorithm, Adaptive Mobile Learning Testing (AMLT), which combines item response theory with machine learning for individualized assessment. The AMLT algorithm consists of the following components.

1. Item Bank. A bank of questions covering different difficulty levels and topics.

2. Learner Model. A machine learning model that processes learner performance data to update its estimate of the learner's knowledge and learning style.

3. Adaptive Engine. The component that selects questions from the item bank based on the current estimates of the learner model, adjusting the difficulty and content of subsequent questions.
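As a minimal sketch of how these three components could interact, the following Python example assumes a one-parameter logistic (Rasch) item response model, item difficulties expressed on the same logit scale as learner ability, and a simple step-wise ability update. The names `Item`, `p_correct`, `select_next_item`, and `update_ability`, as well as the learning rate, are hypothetical and are not taken from the AMLT implementation. A single ability number stands in for the machine learning learner model.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    """One entry of the item bank (hypothetical structure)."""
    question_id: int
    topic: str
    difficulty: float  # assumed to be on the same logit scale as ability


def p_correct(ability: float, difficulty: float) -> float:
    """Rasch (1PL) probability that the learner answers correctly."""
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


def select_next_item(ability, item_bank, asked_ids):
    """Pick the unasked item whose difficulty is closest to the ability.

    Under the Rasch model this is the most informative remaining item.
    """
    candidates = [it for it in item_bank if it.question_id not in asked_ids]
    if not candidates:
        return None
    return min(candidates, key=lambda it: abs(it.difficulty - ability))


def update_ability(ability, item, correct, learning_rate=0.5):
    """Move the ability estimate toward the observed outcome."""
    expected = p_correct(ability, item.difficulty)
    observed = 1.0 if correct else 0.0
    return ability + learning_rate * (observed - expected)


# Illustrative item bank; the difficulty values are made up for this sketch.
item_bank = [
    Item(1, "Math", -1.0),
    Item(2, "Science", 0.0),
    Item(3, "English", 1.0),
]

ability = 0.0
asked = set()

first = select_next_item(ability, item_bank, asked)
asked.add(first.question_id)
ability = update_ability(ability, first, correct=True)
second = select_next_item(ability, item_bank, asked)
```

In this sketch a correct answer raises the ability estimate, so the next selected item is harder. An incorrect answer would lower the estimate and lead to an easier item.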

4. Implementation

The AMLT algorithm is implemented in Python with the scikit-learn library. The structure of the code is shown as a flowchart below:

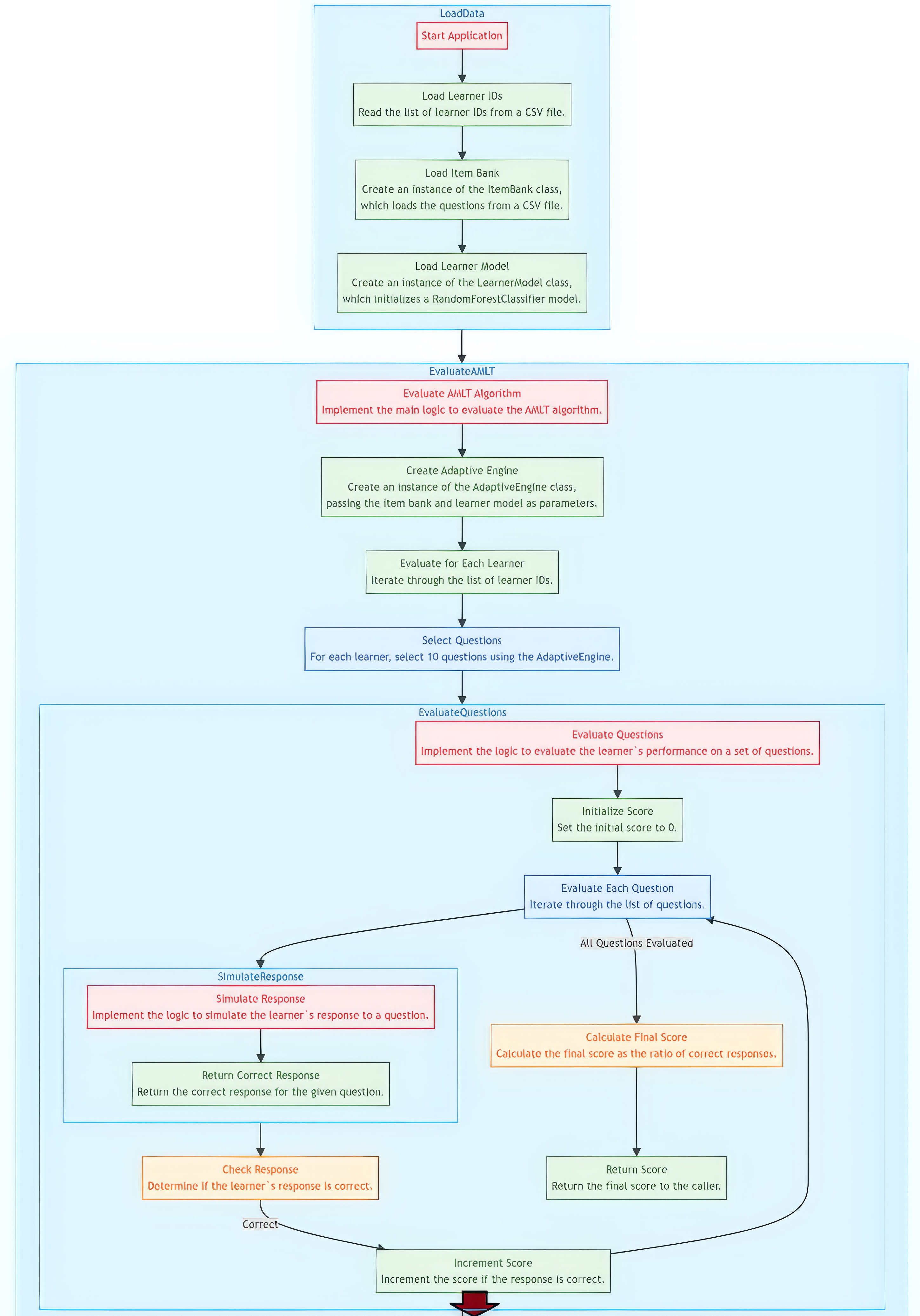

Figure 1 - AMLT algorithm as a flowchart diagram (part 1)

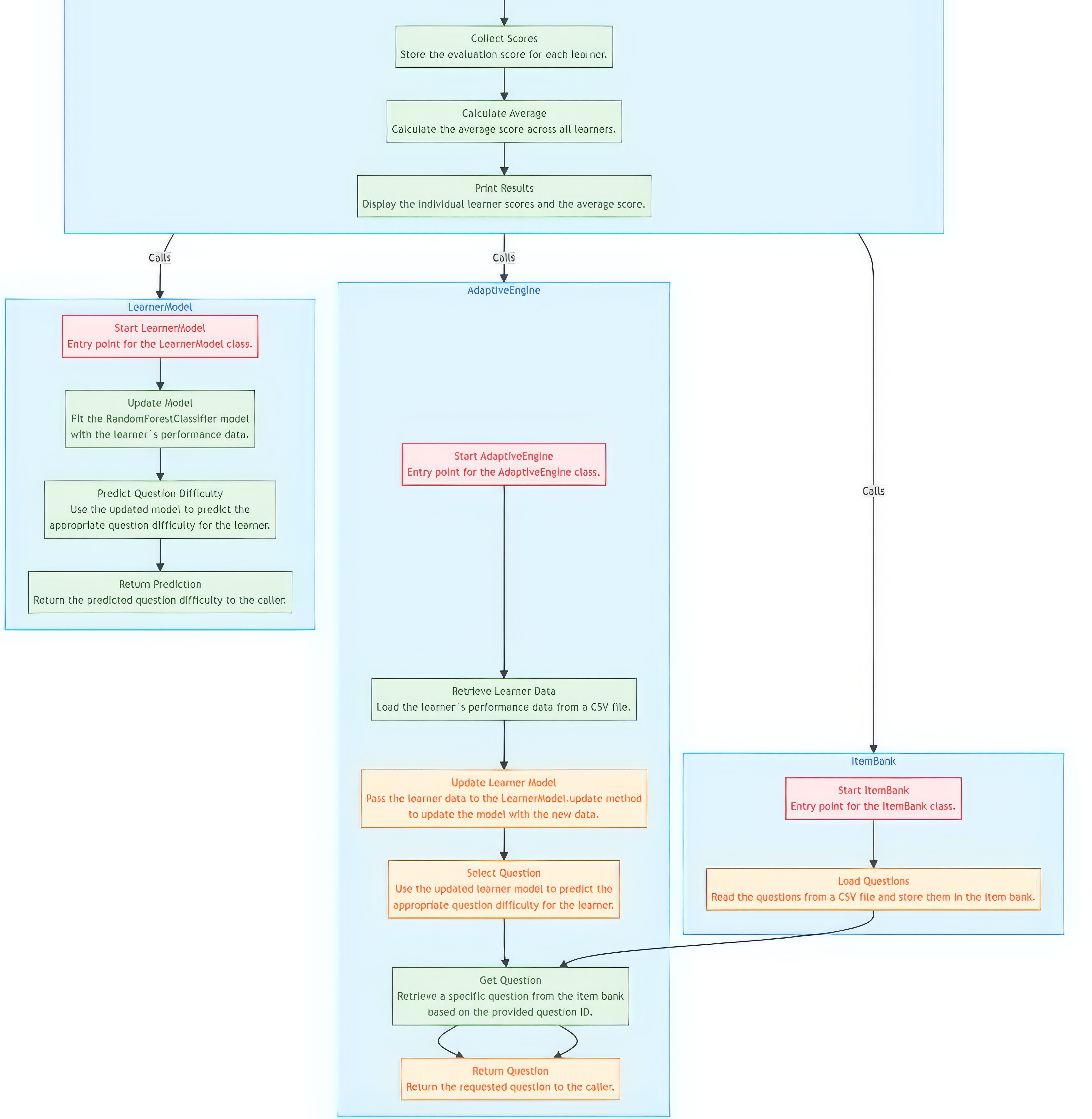

Figure 2 - AMLT algorithm as a flowchart diagram (part 2)

1. Load Data. This part of the code sets up the AMLT algorithm by loading all required data, including learner IDs, the question pool, and learner profiles.

2. Evaluate AMLT. This part runs the AMLT algorithm. It creates an AdaptiveEngine and, for each learner, selects questions and checks the answers, collecting the scores to compute and display the average.

3. Adaptive Engine. The AdaptiveEngine retrieves the learner's performance, updates the learner profile accordingly, selects the next best question based on this profile, and retrieves that question from the question pool.

4. Learner Model. The Learner Model updates a RandomForestClassifier with the learner's performance data in order to predict future performance.

5. Item Bank. The ItemBank class reads questions from a CSV file and provides a function to retrieve a particular question from the bank.

6. Score Questions. This part describes the overall process of scoring a learner on a set of questions. The score starts at zero; for each question, the learner's answer is simulated and checked for correctness, and the total score is then calculated.

7. Simulate Response. This part simulates the learner's response to a question. It simply returns the correct response to the question.

The Mermaid flowchart gives a complete view of how the AMLT algorithm is implemented, showing how the different parts interact and how control and data flow through the system. The notes alongside each part of the chart indicate what it does and how.
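The scoring and evaluation steps described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the original code: the dictionary layout of a question, the `answer` key, and the function names `simulate_response`, `score_questions`, and `evaluate` are hypothetical. The RandomForestClassifier learner model and the adaptive question selection are omitted to keep the example self-contained.

```python
from statistics import mean


class ItemBank:
    """Holds questions keyed by question ID (hypothetical structure)."""

    def __init__(self, questions):
        self._questions = {q["question_id"]: q for q in questions}

    def get_question(self, question_id):
        """Return a particular question from the bank."""
        return self._questions[question_id]

    def question_ids(self):
        return list(self._questions)


def simulate_response(question):
    """Simulated learner: returns the correct response to the question."""
    return question["answer"]


def score_questions(questions):
    """Start at zero, answer each question, and count the correct ones."""
    score = 0
    for question in questions:
        if simulate_response(question) == question["answer"]:
            score += 1
    return score


def evaluate(learner_ids, item_bank):
    """Score every learner on the full bank and return scores and average."""
    scores = {}
    for learner_id in learner_ids:
        questions = [item_bank.get_question(q) for q in item_bank.question_ids()]
        scores[learner_id] = score_questions(questions)
    return scores, mean(scores.values())


# Illustrative questions; the answer keys are assumptions for this sketch.
bank = ItemBank([
    {"question_id": 1, "topic": "Math", "answer": "A"},
    {"question_id": 2, "topic": "Science", "answer": "A"},
    {"question_id": 3, "topic": "English", "answer": "C"},
])
scores, average = evaluate([1, 2], bank)
```

Because the simulated learner always returns the correct response, every learner in this sketch receives the maximum score. An evaluation on real data would replace `simulate_response` with the learners' recorded answers.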

4.2. Sample Dataset

The following sample dataset, in CSV format, can be used for training and evaluating the AMLT algorithm:

learner_id,question_id,response,correct,difficulty,topic

1,1,A,1,0.5,Math

1,2,B,0,0.7,Science

1,3,C,1,0.3,English

2,1,B,0,0.5,Math

2,2,A,1,0.7,Science

2,3,D,0,0.3,English

4.3. Dataset Columns

The dataset contains the following columns:

1. learner_id: An identifier for each learner.

2. question_id: A unique identifier for each question.

3. response: The response that the learner gave to the question: A, B, C, or D.

4. correct: Whether the learner answered the question correctly, where 1 is correct and 0 is wrong.

5. difficulty: The difficulty of the question, ranging from 0.1 to 1.

6. topic: The topic of the question.
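As an illustration of how a dataset with these columns could be read, the sketch below parses the sample rows with Python's standard `csv` module and computes each learner's share of correct answers. The helper names `load_rows` and `accuracy_by_learner` are hypothetical, and the data is embedded as a string only so that the example is self-contained.

```python
import csv
import io
from collections import defaultdict

SAMPLE_CSV = """learner_id,question_id,response,correct,difficulty,topic
1,1,A,1,0.5,Math
1,2,B,0,0.7,Science
1,3,C,1,0.3,English
2,1,B,0,0.5,Math
2,2,A,1,0.7,Science
2,3,D,0,0.3,English
"""


def load_rows(text):
    """Parse the CSV text and convert each column to a suitable type."""
    rows = []
    for raw in csv.DictReader(io.StringIO(text)):
        rows.append({
            "learner_id": int(raw["learner_id"]),
            "question_id": int(raw["question_id"]),
            "response": raw["response"],
            "correct": int(raw["correct"]),
            "difficulty": float(raw["difficulty"]),
            "topic": raw["topic"],
        })
    return rows


def accuracy_by_learner(rows):
    """Return the fraction of correctly answered questions per learner."""
    totals = defaultdict(lambda: [0, 0])  # learner_id -> [correct, attempted]
    for row in rows:
        totals[row["learner_id"]][0] += row["correct"]
        totals[row["learner_id"]][1] += 1
    return {lid: c / n for lid, (c, n) in totals.items()}


rows = load_rows(SAMPLE_CSV)
accuracy = accuracy_by_learner(rows)
```

With the six sample rows, learner 1 answers two of three questions correctly and learner 2 answers one of three.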

5. Conclusion

The study indicates that AMLT supports personalized assessment and better learning outcomes within mobile learning. By using machine learning algorithms, AMLT can adapt to learners' knowledge, learning style, and pace for a better learning experience.

This paper proposes an adaptive mobile learning testing approach that uses machine learning algorithms to create tailored assessments that may improve learning outcomes. The AMLT algorithm combines item response theory with machine learning techniques and adapts to the learner's knowledge level, learning style, and pace. The results obtained in this study indicate the potential of AMLT to enhance learning and improve results in a mobile learning environment. Future research directions could include:

1. Item Bank Expansion. Grow and diversify the item bank to accommodate more learners and cover more subjects for greater educational impact.

2. Learner Model Improvement. Extend the learner model to capture additional factors such as learning styles, motivation, and prior knowledge.

3. Integration with Other Technologies. Integrate AMLT with other technologies, such as natural language processing and gamification, to provide more comprehensive and engaging learning experiences.

4. Large-Scale Evaluation. Conduct large-scale evaluations to confirm the effectiveness of AMLT in real-world mobile learning environments.